Legacy processes meet a new cost curve

The pressure is not that AI can do everything, but that it changes the relative cost of coordination, analysis, and content production. That shift quietly breaks assumptions embedded in many operating models.

When the unit cost collapses

Many core processes were designed when analysis was expensive, writing took time, and knowledge lived in individuals rather than systems. AI changes that cost curve. If a first draft, a classification decision, or a case summary can be produced in seconds, then steps built to ration expert time start to look like friction rather than control. The opportunity is not speed for its own sake, but a chance to redesign service levels, error rates, and capacity planning.

In practical terms, the differences can be substantial. A customer query that used to require 12 to 18 minutes of agent handling might fall to 5 to 9 minutes with AI-supported triage and drafting. A compliance analyst who used to read 60 documents a week might review 120 with better prioritisation. These are not guaranteed outcomes, but they are large enough to challenge existing staffing models and cycle-time promises.

Why pilots disappoint

Pilots often overlay AI onto existing queues, approval chains, and performance measures. The workflow remains batch-based, the handoffs stay the same, and the risk controls were built for humans producing the work. AI then appears as a helpful assistant, not as a redesign lever. The predictable outcome is a patchwork of local wins, uneven quality, and debates about whether the technology is "ready" rather than whether the process is.

At LSI, the same pattern appears in education: personalisation only becomes real when assessment and feedback are redesigned around continuous improvement, not bolted onto a term-based model. That experience generalises: AI value scales when the organisation is willing to re-specify the process, not just accelerate it.

Processes suited to replacement decisions

Some process components are sufficiently bounded and testable that replacement is feasible without rewriting the whole operating model. The question becomes whether the organisation can define acceptance criteria and maintain traceability.

High-volume, low-discretion casework

AI is most likely to replace work where the objective is stable, inputs are reasonably structured, and exceptions can be escalated. Examples include invoice coding, basic purchase order matching, routine customer identity checks, and first-pass document classification. If the cost per case is currently £8 to £25 and volumes are in the hundreds of thousands, even a 20 to 40 percent reduction in handling cost can fund stronger controls and change management.
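The arithmetic behind that claim can be bracketed quickly. The sketch below pairs the cheapest cases with the smallest reduction and the dearest cases with the largest. The volume of 300,000 cases a year is an illustrative assumption within the "hundreds of thousands" range, not a figure from the text.

```python
def annual_saving(cost_per_case: float, cases_per_year: int, reduction_pct: float) -> float:
    """Gross annual handling-cost saving in pounds for a given percentage reduction."""
    return cost_per_case * cases_per_year * reduction_pct / 100

# Assumed volume for illustration only.
VOLUME = 300_000

# Bracket the scenario: £8 cases with a 20% cut vs. £25 cases with a 40% cut.
low = annual_saving(8, VOLUME, 20)
high = annual_saving(25, VOLUME, 40)
print(f"Gross saving range: £{low:,.0f} to £{high:,.0f}")
# Gross saving range: £480,000 to £3,000,000
```

Even the low end of the range is a budget line large enough to pay for controls and change management; the spread shows why the per-case baseline matters so much.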

The replacement decision hinges on whether exceptions are genuinely rare, or merely hidden in the long tail. A useful test is whether the organisation can describe, in plain language, what "good" looks like and how it will be measured at the case level.
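One way to test whether exceptions are rare or merely hidden in the long tail is to profile case types by frequency. This is a minimal sketch, assuming each historical case has already been labelled with a type; the `rare_below` cut-off and the sample data are hypothetical.

```python
from collections import Counter

def tail_profile(case_types: list[str], rare_below: int) -> tuple[float, int]:
    """Return (share of volume, number of distinct types) for types seen fewer than `rare_below` times."""
    counts = Counter(case_types)
    total = sum(counts.values())
    rare = {t: n for t, n in counts.items() if n < rare_below}
    return sum(rare.values()) / total, len(rare)

# Hypothetical sample: one dominant type, one modest type, ten one-off oddities.
sample = ["standard"] * 85 + ["credit note"] * 5 + [f"odd-{i}" for i in range(10)]
share, distinct = tail_profile(sample, rare_below=3)
print(f"{share:.0%} of volume spread across {distinct} rare case types")
```

Here each oddity looks negligible on its own, yet together they account for a tenth of volume, and each may need its own handling rule before replacement is safe.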

Drafting and summarisation with verifiable sources

Drafting is often a safe target when the output can be grounded in approved sources and validated before use: call notes, meeting summaries, RFP responses drawn from a controlled knowledge base, or internal policy FAQs. Replacement is not about removing humans from communication but about removing blank-page time and increasing consistency.

Where drafting becomes risky is when the organisation treats generated text as truth rather than as a hypothesis. If there is no clear source-of-record, no citation discipline, and no accountable reviewer, automation simply moves errors faster.
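Citation discipline can be enforced mechanically before a draft ever reaches the accountable reviewer. The sketch below assumes a hypothetical convention in which generated text cites approved knowledge-base entries as `[KB-123]`; it flags sentences with no citation and citations to sources outside the approved set. It checks grounding, not truth, so the human review still matters.

```python
import re
from dataclasses import dataclass

# Hypothetical citation format: [KB-<number>] referring to an approved source of record.
CITATION = re.compile(r"\[(KB-\d+)\]")

@dataclass
class DraftCheck:
    uncited_sentences: list[str]
    unknown_sources: set[str]

    @property
    def passes_source_check(self) -> bool:
        return not self.uncited_sentences and not self.unknown_sources

def check_draft(draft: str, approved_sources: set[str]) -> DraftCheck:
    """Flag uncited sentences and citations to sources not in the approved set."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", draft) if s.strip()]
    uncited = [s for s in sentences if not CITATION.search(s)]
    cited = set(CITATION.findall(draft))
    return DraftCheck(uncited, cited - approved_sources)

approved = {"KB-12", "KB-40"}
draft = ("Refunds are issued within 5 working days [KB-12]. "
         "Fees may be waived on request. "
         "Premium users get priority [KB-77].")
result = check_draft(draft, approved)
print(result.passes_source_check, result.uncited_sentences, result.unknown_sources)
```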

Monitoring and detection workflows

In areas such as fraud monitoring, cyber triage, or quality assurance sampling, AI can replace some manual scanning by prioritising what deserves attention. This is replacement in the sense of reallocating human attention, not eliminating it. The key trade-off is between false positives that waste time and false negatives that create loss events. Both have measurable costs, but one also has reputational consequences that are harder to price.
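Because both error types carry a cost, the alert threshold can be chosen to minimise their sum on historical, labelled cases. This is a minimal sketch: the scores, labels, and the £15 review cost and £400 loss cost are hypothetical, and reputational harm would have to be folded in as a higher false-negative cost, which is itself a judgement.

```python
def expected_cost(scores: list[float], labels: list[bool], threshold: float,
                  cost_fp: float, cost_fn: float) -> float:
    """Total cost of false positives (wasted review) and false negatives (missed losses)."""
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and not y)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y)
    return fp * cost_fp + fn * cost_fn

def best_threshold(scores: list[float], labels: list[bool], cost_fp: float, cost_fn: float) -> float:
    # Candidate thresholds: every observed score, plus "never alert" (infinity).
    candidates = sorted(set(scores)) + [float("inf")]
    return min(candidates, key=lambda t: expected_cost(scores, labels, t, cost_fp, cost_fn))

# Hypothetical history: model scores and whether each case was genuinely fraudulent.
scores = [0.1, 0.3, 0.4, 0.6, 0.8, 0.9]
labels = [False, False, True, False, True, True]
t = best_threshold(scores, labels, cost_fp=15, cost_fn=400)
print(t, expected_cost(scores, labels, t, 15, 400))
```

Raising `cost_fn` pushes the threshold down and sends more cases to humans, which is the reallocation of attention the paragraph describes.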


Processes that demand redesign first

Other processes contain judgement, incentives, and trust dynamics that make simple automation brittle. In these areas, AI can still create step-change value, but only after the process is redefined end to end.

Customer service as a relationship system

Contact centres are often treated as throughput machines, measured by average handling time. AI makes it tempting to automate responses aggressively. Yet the process is also a trust system: mishandled vulnerability, complaints, or regulated advice can create outsized harm. Redesign often starts by separating intents: queries that are informational, queries that change a customer’s position, and queries that involve risk or emotion. Different controls, response times, and human oversight apply to each.
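Separating intents lends itself to an explicit policy table rather than a single automation setting. The sketch below encodes the three intent classes from the text; the specific controls and response targets are hypothetical, as is the rule that a vulnerability flag overrides the intent class.

```python
from dataclasses import dataclass
from enum import Enum

class Intent(Enum):
    INFORMATIONAL = "informational"
    CHANGES_POSITION = "changes_position"   # e.g. alters a customer's account or contract
    RISK_OR_EMOTION = "risk_or_emotion"     # complaints, regulated advice, distress

@dataclass(frozen=True)
class Controls:
    ai_may_respond_alone: bool
    human_oversight: str
    target_response_minutes: int

# Illustrative policy: each intent class gets its own controls.
POLICY = {
    Intent.INFORMATIONAL: Controls(True, "sampled quality review", 1),
    Intent.CHANGES_POSITION: Controls(False, "agent approves before sending", 30),
    Intent.RISK_OR_EMOTION: Controls(False, "specialist handles end to end", 15),
}

def route(intent: Intent, vulnerable: bool = False) -> Controls:
    # Assumption: a vulnerability flag always escalates to the most protected class.
    if vulnerable:
        return POLICY[Intent.RISK_OR_EMOTION]
    return POLICY[intent]

print(route(Intent.INFORMATIONAL))
print(route(Intent.INFORMATIONAL, vulnerable=True))
```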

A redesigned flow may push routine queries to self-serve, while investing more expert time into complex cases. Paradoxically, cost reduction can come from spending more per case on the cases that matter, while spending almost nothing on the ones that do not.

Decisioning in credit, insurance, and healthcare

Where decisions affect eligibility, pricing, or safety, AI should prompt a redesign of accountability rather than an attempt to remove it. The governance question becomes concrete: who is the accountable owner of the decision logic, who can change it, and what evidence is required? In regulated environments, auditability and explainability are not optional, and the EU AI Act and sector regulators are likely to increase expectations rather than relax them.

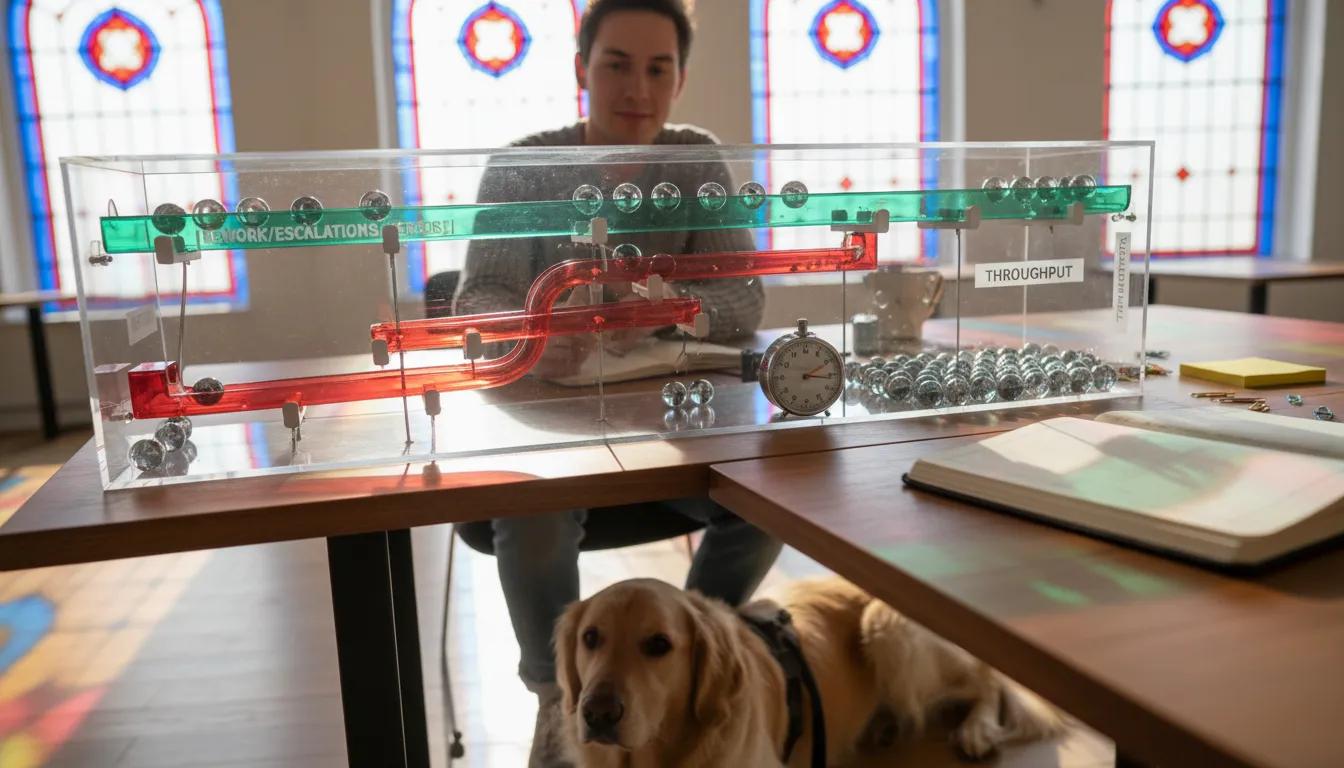

Redesign can include clearer human-in-the-loop points, better documentation of decision rationales, and "rights to challenge" for affected parties. The operational benefit is fewer rework loops and fewer escalations caused by opaque outcomes.
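The governance questions above (who owns the decision logic, who can change it, what evidence is required) can be made concrete in the records a system keeps. This is a minimal sketch with hypothetical names and fields: each decision is stored with its rationale and logic version, affected parties can lodge a challenge, and only named approvers with supporting evidence can change the logic.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional

@dataclass(frozen=True)
class DecisionLogic:
    version: str
    owner: str                    # accountable owner of the decision logic
    approvers: frozenset[str]     # people permitted to change it

@dataclass
class DecisionRecord:
    case_id: str
    outcome: str
    rationale: list[str]          # human-readable reasons for the outcome
    logic_version: str
    human_reviewer: Optional[str] = None
    challenge: Optional[str] = None
    recorded_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))

    def lodge_challenge(self, reason: str) -> None:
        """Record an affected party's challenge against this decision."""
        self.challenge = reason

def change_logic(current: DecisionLogic, new_version: str,
                 requested_by: str, evidence: list[str]) -> DecisionLogic:
    """Issue a new logic version only for a named approver who supplies evidence."""
    if requested_by not in current.approvers:
        raise PermissionError(f"{requested_by} may not change {current.version}")
    if not evidence:
        raise ValueError("a logic change needs supporting evidence")
    return DecisionLogic(new_version, current.owner, current.approvers)

logic = DecisionLogic("credit-rules-v3", "Head of Credit Risk",
                      frozenset({"Head of Credit Risk", "Model Risk Lead"}))
record = DecisionRecord("APP-1042", "declined", ["debt-to-income above policy limit"], logic.version)
record.lodge_challenge("Income figure is out of date")
new_logic = change_logic(logic, "credit-rules-v4", "Model Risk Lead", ["back-test on prior applications"])
```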

Knowledge work that is actually coordination work

Some of the most expensive "knowledge work" is really coordination: preparing slide decks for alignment, rewriting the same status updates, attending meetings to reconstruct shared context. AI can reduce the production burden, but the deeper redesign is governance: clearer decision rights, fewer committees, shorter feedback loops, and stronger single-thread ownership for outcomes.

If AI increases the volume of content without changing how decisions are made, the organisation can become noisier rather than faster.

Operating model implications for AI-native work

AI-native organisations treat AI as part of the process architecture, with clear accountability, controls, and economic ownership. This typically requires changes to roles, decision rights, and how work moves across functions.

Accountability that survives automation

Replacing tasks with AI can accidentally remove the role that used to be responsible for quality. AI-native redesign tends to introduce explicit accountability for model and workflow performance. This is not only a technical role. It includes process owners who understand cost-to-serve, risk appetite, and customer impact, and who can arbitrate trade-offs when accuracy conflicts with speed.

Central platforms, federated outcomes

Platform choices often benefit from centralisation: shared data controls, logging, identity, model risk management, and vendor oversight. Outcome design usually works better closer to the work: defining what good looks like in claims, procurement, HR, or service operations. The tension between the two is productive when it is made explicit. Left implicit, it breeds duplication, and risk multiplies with it.

Cadence from pilots to production

Industrialising AI typically requires a portfolio cadence. Pilots can explore value and feasibility quickly, but production needs operational commitments: service levels, incident response, retraining cycles, and budget lines that survive the next quarter. A practical governance pattern is a lightweight stage gate that asks for evidence on unit economics, control effectiveness, and user behaviour change, not just model performance.
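A lightweight stage gate of that kind can be expressed as a handful of explicit checks. The sketch below is illustrative: the evidence fields and every threshold are assumptions to be set by the organisation, and model accuracy is deliberately only one criterion among four.

```python
from dataclasses import dataclass

@dataclass
class PilotEvidence:
    model_accuracy: float          # share of outputs judged correct in evaluation
    baseline_cost_per_case: float  # £ per case before the pilot
    pilot_cost_per_case: float     # £ per case during the pilot, including review time
    control_tests_passed: int
    control_tests_run: int
    adoption_rate: float           # share of eligible cases where staff actually used the AI output

def stage_gate(e: PilotEvidence, *, min_accuracy: float = 0.90, min_cost_cut: float = 0.10,
               min_control_pass: float = 0.95, min_adoption: float = 0.60) -> tuple[bool, list[str]]:
    """Return (passes, reasons_for_failure). All thresholds are illustrative defaults."""
    reasons = []
    if e.model_accuracy < min_accuracy:
        reasons.append(f"model accuracy {e.model_accuracy:.0%} below {min_accuracy:.0%}")
    cost_cut = 1 - e.pilot_cost_per_case / e.baseline_cost_per_case
    if cost_cut < min_cost_cut:
        reasons.append(f"unit cost cut {cost_cut:.0%} below {min_cost_cut:.0%}")
    if e.control_tests_run == 0 or e.control_tests_passed / e.control_tests_run < min_control_pass:
        reasons.append("control effectiveness not evidenced")
    if e.adoption_rate < min_adoption:
        reasons.append(f"adoption {e.adoption_rate:.0%} below {min_adoption:.0%}")
    return (not reasons, reasons)

# A pilot with a good model and good economics that staff are not actually using.
evidence = PilotEvidence(0.93, 12.0, 10.2, 20, 20, 0.45)
passes, reasons = stage_gate(evidence)
print(passes, reasons)
```

In this example the model clears its bar but the gate still fails on adoption, which is the behaviour-change constraint the next paragraph describes.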

Behaviour change is often the hidden constraint. If incentives still reward manual effort or local optimisation, the process will drift back to its previous shape, regardless of how capable the AI is.


ROI that includes risk and resilience

Measuring AI value is less about counting time saved and more about capturing changes in throughput, error, loss events, and customer outcomes. Metrics need to support learning without masking harm.

Unit economics as the common language

AI-native redesign benefits from a clear baseline: cost per case, cycle time, rework rate, escalation rate, and the cost of quality failures. Benefits then become legible. For instance, if an operation processes 500,000 cases a year at £12 per case, a 25 percent reduction is £1.5 million of gross capacity value. The net value depends on governance, training, monitoring, and any increase in expert review for high-risk cases.
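The worked figure above, and the step from gross to net, can be reproduced in a few lines. The gross inputs are the ones in the text; the running-cost lines are hypothetical placeholders for governance, training, monitoring, and extra expert review.

```python
def gross_capacity_value(cases_per_year: int, cost_per_case: float, reduction_pct: float) -> float:
    return cases_per_year * cost_per_case * reduction_pct / 100

def net_value(gross: float, running_costs: dict[str, float]) -> float:
    return gross - sum(running_costs.values())

gross = gross_capacity_value(500_000, 12, 25)   # figures from the text

# Hypothetical annual costs of running the redesigned process safely.
running_costs = {
    "governance": 150_000,
    "training": 100_000,
    "monitoring": 120_000,
    "expert_review_high_risk": 230_000,
}
net = net_value(gross, running_costs)
print(f"Gross £{gross:,.0f}, net £{net:,.0f}")  # Gross £1,500,000, net £900,000
```

Keeping the cost lines itemised makes it visible when, for example, expert review of high-risk cases grows faster than the savings it protects.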

Leading indicators that predict outcomes

Lagging indicators such as annual cost reduction or NPS move slowly. Leading indicators can reveal whether the redesign is working: percentage of cases handled straight-through, proportion of AI outputs accepted without rework, citation coverage for generated responses, model drift signals, and the time to detect and correct an error pattern. These measures also support regulatory defensibility because they show active control rather than passive hope.
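Most of these leading indicators fall out of an ordinary case log. This is a minimal sketch over a hypothetical log schema; drift signals are left out because they depend on the model and data involved.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean
from typing import Optional

@dataclass
class CaseLog:
    straight_through: bool         # completed with no human touch
    accepted_without_rework: bool  # AI output used as-is
    sentences: int                 # sentences in the generated response
    cited_sentences: int           # of which carry a source citation

@dataclass
class ErrorPattern:
    first_seen: datetime  # earliest affected case, established in hindsight
    detected: datetime
    corrected: datetime

def _hours(delta: timedelta) -> float:
    return delta.total_seconds() / 3600

def leading_indicators(cases: list[CaseLog], patterns: list[ErrorPattern]) -> dict[str, Optional[float]]:
    n = len(cases)
    total_sentences = sum(c.sentences for c in cases)
    return {
        "straight_through_rate": sum(c.straight_through for c in cases) / n if n else None,
        "accepted_without_rework_rate": sum(c.accepted_without_rework for c in cases) / n if n else None,
        "citation_coverage": sum(c.cited_sentences for c in cases) / total_sentences if total_sentences else None,
        "mean_hours_to_detect": mean(_hours(p.detected - p.first_seen) for p in patterns) if patterns else None,
        "mean_hours_to_correct": mean(_hours(p.corrected - p.detected) for p in patterns) if patterns else None,
    }

cases = [CaseLog(True, True, 4, 4), CaseLog(True, False, 5, 4),
         CaseLog(False, True, 3, 3), CaseLog(False, False, 0, 0)]
patterns = [ErrorPattern(datetime(2024, 3, 1, 9), datetime(2024, 3, 1, 21), datetime(2024, 3, 2, 9))]
indicators = leading_indicators(cases, patterns)
print(indicators)
```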

Resilience as a measurable benefit

Resilience is often treated as an abstract virtue. It can be priced. Faster recovery from incidents, fewer single points of failure in expert knowledge, and improved continuity during demand spikes all have value. AI can support that, but only if fallbacks exist and the organisation rehearses them. A process that is 30 percent faster on a good day but fails unpredictably is not an improvement.
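A fallback only counts if it is wired in and exercised. The sketch below wraps a hypothetical AI step so that any failure routes the case to a manual queue, and adds a `force_fallback` switch so the manual path can be rehearsed on demand. Catching every exception is a deliberate simplification for illustration.

```python
import logging
from typing import Callable

logger = logging.getLogger("ai_fallback")

def process_case(case: dict, ai_step: Callable[[dict], str],
                 manual_queue: list, force_fallback: bool = False) -> str:
    """Try the AI step; on failure, or during a rehearsal, hand the case to humans."""
    if force_fallback:
        manual_queue.append(case)
        return "manual (drill)"
    try:
        return ai_step(case)
    except Exception as exc:  # broad on purpose in this sketch: any failure falls back
        logger.warning("AI step failed for case %s: %s", case.get("id"), exc)
        manual_queue.append(case)
        return "manual (fallback)"

def flaky_model(case: dict) -> str:
    raise TimeoutError("model endpoint timed out")  # simulated outage

queue: list = []
print(process_case({"id": "C-1"}, flaky_model, queue))  # manual (fallback)
```

Running periodic drills with `force_fallback=True` is one way to check that the manual queue still has the people and context to absorb the load.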

Trust boundaries and the right to pause

The most important redesign choices are often about where AI should not act alone. Trust boundaries clarify when human oversight is required and when automation is acceptable, protecting customers, employees, and the organisation’s licence to operate.

Human oversight as a design feature

Human-in-the-loop can be meaningful or cosmetic. Meaningful oversight means the human has time, context, and authority to intervene, and the system records what happened. Cosmetic oversight is a rubber stamp created to feel safe. AI-native design tends to specify oversight triggers: novelty, low confidence, high impact, vulnerable users, or potential discrimination. The trigger design is as important as the model.
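The triggers named above can be specified as explicit, logged rules. This is a minimal sketch: the signal fields, how novelty is scored, the separate fairness check that sets `fairness_flag`, and all thresholds are assumptions.

```python
from dataclasses import dataclass

@dataclass
class CaseSignals:
    confidence: float     # model confidence, 0..1
    novelty: float        # how unlike past cases this one is, 0..1 (scoring method assumed)
    impact_gbp: float     # financial impact of the decision
    vulnerable_user: bool
    fairness_flag: bool   # raised by a separate fairness check (assumed to exist)

def oversight_triggers(s: CaseSignals, *, min_confidence: float = 0.85,
                       max_novelty: float = 0.7, max_impact_gbp: float = 5_000) -> list[str]:
    """Return every reason a human must review this case; empty means automation may proceed."""
    triggers = []
    if s.novelty > max_novelty:
        triggers.append("novelty")
    if s.confidence < min_confidence:
        triggers.append("low confidence")
    if s.impact_gbp > max_impact_gbp:
        triggers.append("high impact")
    if s.vulnerable_user:
        triggers.append("vulnerable user")
    if s.fairness_flag:
        triggers.append("potential discrimination")
    return triggers

signals = CaseSignals(confidence=0.92, novelty=0.2, impact_gbp=12_000,
                      vulnerable_user=True, fairness_flag=False)
print(oversight_triggers(signals))  # ['high impact', 'vulnerable user']
```

Returning the reasons rather than a bare yes/no gives the reviewer context and leaves a record of why oversight was invoked.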

Reputational risk is path dependent

Trust is easier to lose than to regain. A single highly visible failure can dominate perception and invite regulatory scrutiny. That argues for selective ambition: moving fastest where impact is bounded and evidence is strong, while being conservative where harm is hard to reverse. It also argues for transparency in internal governance: clear escalation routes, documented decisions, and a culture that can pause or roll back when signals look wrong.
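The ability to pause can be built in rather than improvised. The sketch below is a simple circuit breaker, with hypothetical window size and error threshold: it pauses automation when the recent error rate is too high, lets anyone pause manually, and requires a named person to resume, logging each step.

```python
from collections import deque

class AutomationSwitch:
    """Pause automated handling when recent errors exceed a threshold; resume only by a named person."""

    def __init__(self, window: int = 200, max_error_rate: float = 0.05, min_samples: int = 50):
        self.outcomes: deque = deque(maxlen=window)  # rolling record of recent error flags
        self.max_error_rate = max_error_rate
        self.min_samples = min_samples
        self.paused = False
        self.audit_log: list[tuple[str, str, str]] = []

    def record(self, is_error: bool) -> None:
        self.outcomes.append(is_error)
        if not self.paused and len(self.outcomes) >= self.min_samples:
            rate = sum(self.outcomes) / len(self.outcomes)
            if rate > self.max_error_rate:
                self.pause(by="automatic monitor",
                           reason=f"error rate {rate:.1%} above {self.max_error_rate:.1%}")

    def pause(self, by: str, reason: str) -> None:
        self.paused = True
        self.audit_log.append(("pause", by, reason))

    def resume(self, by: str, reason: str) -> None:
        self.paused = False
        self.outcomes.clear()  # start a fresh window after the fix
        self.audit_log.append(("resume", by, reason))

switch = AutomationSwitch(window=100, max_error_rate=0.05, min_samples=20)
for outcome in [False] * 18 + [True] * 2:
    switch.record(outcome)
print(switch.paused, switch.audit_log)
```

While `paused` is true, cases would route to human handling; the audit log documents who paused, who resumed, and why.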

A decision test for replacement versus redesign

If the process were being built today with the same regulatory constraints and customer expectations, would it be designed around queues of human attention or around continuous machine-supported flow with explicit trust boundaries? That question often reveals whether the real opportunity is replacement of tasks or redesign of the whole service.

The uncomfortable question that follows is simpler: what part of the organisation’s identity currently depends on humans doing work that is no longer economically or ethically defensible to keep manual?

London School of Innovation

LSI is a UK higher education institution, offering master's degrees, executive and professional courses in AI, business, technology, and entrepreneurship.

Our focus is forging AI-native leaders.
